Fully scaled quantum technology is still a way off, but some banks are already thinking ahead to the potential value.

Many financial services activities, from securities pricing to portfolio optimization, require the ability to assess a range of potential outcomes. To do this, banks use algorithms and models that calculate statistical probabilities. These are fairly effective but not infallible, as the global financial crisis of 2008 showed, when apparently low-probability events occurred more frequently than expected.

In a data-heavy world, ever-more powerful computers are essential to calculating probabilities accurately. With that in mind, several banks are turning to a new generation of processors that leverage the principles of quantum physics to crunch vast amounts of data at very high speed. Google, a leader in the field, said in 2019 that its Sycamore quantum processor took a little more than three minutes to perform a task that would occupy a supercomputer for thousands of years. The experiment was subject to caveats but effectively demonstrated quantum computing’s potential, which for some tasks is orders of magnitude beyond that of classical machines.

Financial institutions that can harness quantum computing are likely to see significant benefits. In particular, they will be able to more effectively analyze large or unstructured data sets. Sharper insights into these domains could help banks make better decisions and improve customer service, for example through timelier or more relevant offers (perhaps a mortgage based on browsing history). There are equally powerful use cases in capital markets, corporate finance, portfolio management, and encryption-related activities. In an increasingly commoditized environment, this can be a route to real competitive advantage. Quantum computers are particularly promising where algorithms are powered by live data streams, such as real-time equity prices, which carry a high level of random noise.

The impact of the COVID-19 pandemic has shown that accurate and timely assessment of risk remains a serious challenge for financial institutions. Even before the events of 2020, the previous two decades had seen financial and economic crises that led to rapid changes in how banks and other market participants assessed and priced the risk of different asset classes. This led to the introduction of increasingly complex, real-time risk models powered by artificial intelligence but still based on classical computing.

The arrival of quantum computing is potentially game changing, but there is a way to go before the technology can be rolled out at scale. Financial institutions are only just starting to get access to the necessary hardware and to develop the quantum algorithms they will need. Still, a rising number of initiatives suggest a tipping point is on the horizon. For banks yet to engage, and particularly those that rely on computing power to generate competitive edge, the time to act is now.

Back to the future

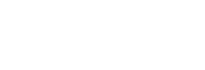

A short theoretical diversion can show how quantum computing represents a step change in computational performance. Quantum computing is based on quantum physics, which reveals the slightly bemusing fact that specific properties of particles can be in two states, or any combination of the two states, at the same time. Whereas traditional computers process binary digits, each of which is either 1 or 0, a quantum system can be 1 and 0 simultaneously, or a weighted mixture of the two. This so-called “superposition” releases processing from binary constraints and enables exploration of immense computational possibilities.

The answers produced by quantum calculations are also different from their binary cousins. Like quantum physics, they are probabilistic rather than deterministic, meaning they can vary even when the input is the same. In practice, this means that the same calculation must be run multiple times to ensure its outputs converge toward a mean.
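The need for repeated runs can be illustrated with a small classical simulation. This is a minimal sketch, not real quantum hardware: it assumes a single qubit whose chance of reading 1 is a fixed, made-up value, and the function names `measure_qubit` and `estimate_p_one` are hypothetical.

```python
import random


def measure_qubit(p_one: float, rng: random.Random) -> int:
    """Simulate one measurement of a qubit that reads 1 with probability p_one."""
    return 1 if rng.random() < p_one else 0


def estimate_p_one(p_one: float, shots: int, seed: int = 0) -> float:
    """Repeat the same 'calculation' many times and average the outcomes."""
    rng = random.Random(seed)
    ones = sum(measure_qubit(p_one, rng) for _ in range(shots))
    return ones / shots


if __name__ == "__main__":
    # Assumed true probability of 0.3; more shots give a tighter estimate.
    for shots in (10, 100, 10_000):
        print(shots, estimate_p_one(0.3, shots))
```

A single run returns only 0 or 1. Only the average over many runs approaches the underlying probability, which is why quantum workloads are typically budgeted in repeated runs rather than single executions.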

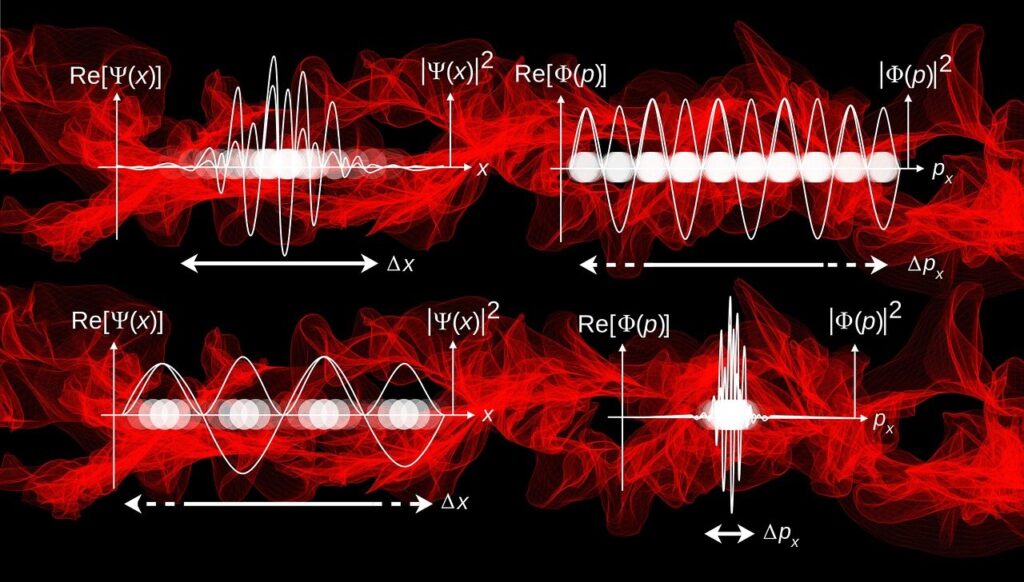

To obtain a quantum state that can be brought into superposition, a quantum computer works not with bits but with quantum bits (qubits), which can be engineered as atomic nuclei, electrons, or photons (Exhibit 1). Through superposition, N qubits can be in a combination of 2^N basis states at once, although this informational richness is not directly accessible to us because the N qubits “collapse” and behave like N classical bits when measured. Before that, while still in their unobserved state of superposition, 2 qubits span 4 basis states, 4 qubits span 16, 16 qubits span 65,536, and so on. A system of 300 qubits spans more states than there are atoms in the universe. A computer based on bits could never track that many states explicitly, which is why quantum computing represents a true “quantum leap” in terms of capability.
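The scaling can be checked with a few lines of arithmetic. The sketch below is illustrative only: the figure of 10^80 atoms is a commonly quoted order-of-magnitude estimate, the 16 bytes per amplitude assumes a classical simulator storing double-precision complex numbers, and the function names are hypothetical.

```python
# Order-of-magnitude estimate often quoted for atoms in the observable universe
# (an assumption used only for comparison).
ATOMS_IN_UNIVERSE_ESTIMATE = 10 ** 80


def num_basis_states(n_qubits: int) -> int:
    """Number of basis states that n_qubits can hold in superposition."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory a classical simulator needs for one complex amplitude per basis state."""
    return num_basis_states(n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (2, 4, 16, 30, 300):
        print(f"{n:>3} qubits -> {num_basis_states(n):.3e} basis states")
    print(num_basis_states(300) > ATOMS_IN_UNIVERSE_ESTIMATE)
```

Under these assumptions, simulating 30 qubits classically already requires 16 GiB of memory, and every additional qubit doubles that figure.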

Another characteristic of quantum states is that qubits can become entangled, which means they are connected and that actions on one qubit impact the other, even if they are separated in space—a phenomenon Albert Einstein described as “spooky action at a distance.” Quantum computers can entangle qubits by passing them through quantum logic gates. For example, a “CNOT” (conditional not) gate flips—or doesn’t flip—a qubit based on the state of another qubit.
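How a CNOT gate produces entanglement can be sketched with a toy two-qubit simulation. The code assumes a plain list of four amplitudes ordered as |00>, |01>, |10>, |11>, with the first qubit as the control. The function names are hypothetical, and the example illustrates the principle rather than any vendor's programming interface.

```python
import math


def apply_hadamard_q0(state):
    """Put the first qubit into an equal superposition of 0 and 1."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """Flip the second qubit only when the first qubit is 1 (swaps |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Chance of observing each of the outcomes 00, 01, 10, 11 on measurement."""
    return [abs(a) ** 2 for a in state]


start = [1.0, 0.0, 0.0, 0.0]  # both qubits begin in state 0
entangled = apply_cnot(apply_hadamard_q0(start))

if __name__ == "__main__":
    print(probabilities(entangled))
```

After the two gates, only the outcomes 00 and 11 have nonzero probability (one half each), so measuring one qubit fixes the value of the other.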

Both superposition and entanglement are critical for the computational “speedup” associated with quantum computing. Without superposition, qubits would behave like classical bits, and would not be in the multiple states that allow quantum programmers to run the equivalent of many calculations at once. Without entanglement, the qubits would sit in superposition without generating additional insight by interacting. No calculation would take place because the state of each qubit would remain independent from the others. The key to creating business value from qubits is to manage superposition and entanglement effectively.

An intermediate step

At the leading edge of the quantum revolution, companies such as IBM, Microsoft, and Google are building quantum computers that aim to do things that classical computers cannot do or could only do in thousands of years. However, the notion of “quantum supremacy” (that is, proof of a quantum computer solving a problem that a classical computer could not tackle in a reasonable amount of time) is predicated on assembling a sufficient number of qubits in a single machine. The world’s leading developers have reached around 60 qubits, which is enough to put the world’s most powerful computers to the test but arguably not to outperform them. That was the case until Google reported having achieved quantum supremacy on the task of sampling the output of random quantum circuits, using just 53 qubits assembled in its Sycamore processor.

In spite of this milestone, no one has yet managed to scale quantum computers to the point where they are useful for practical applications. Some of the calculations relevant to the financial industry would require hundreds or thousands of qubits to resolve. Given the pace of development, however, the timescale for obtaining sufficient capacity is likely to be relatively short, perhaps five to ten years.

However, capacity is only half the story. Qubits are notoriously fickle. Even a tiny change in the environment, such as a heat fluctuation or radio wave, can upset their quantum state and force them back into a classical state in which speedup evaporates. The more qubits are present, the more unstable the system becomes. Qubits also suffer from correlation decay, known as “decoherence.”

One route to stability is to keep quantum chips at temperatures close to absolute zero (in some cases 250 times colder than deep space) and in an isolated environment. However, there is no avoiding the fact that the hardware challenge is significant. The world is still waiting for the first quantum processor with more than a hundred qubits that can operate in a coherent manner, that is, with a fidelity in excess of 99 percent. But there is an intermediate step, which is the so-called quantum annealer. Quantum annealers focus on a single class of tasks, known as discrete optimization problems, which are based on a limited number of independent variables.

Among other things, an annealer may be used to execute hill climbing algorithms: an optimization approach that is analogous to exploring a range of mountains. In seeking the highest peak (or lowest valley), a classical algorithm measures each in turn. A quantum annealer explores all at once by “flooding” the landscape and raising the water level until only the highest peak sticks out. The good news for the financial industry is that a high number of essential algorithmic tasks are optimization problems; portfolio optimization is an example.
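For comparison, the classical version of hill climbing can be sketched as follows. This is a purely classical illustration, not a quantum annealer: the one-dimensional landscape is made up, and the function names are hypothetical. It shows why a step-by-step search can stall on a lower peak and needs several restarts to find the highest one.

```python
import random


def hill_climb(heights, start):
    """Walk uphill from start until no neighbor is higher; return the peak's index."""
    i = start
    while True:
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < len(heights)]
        if not neighbors:
            return i
        best = max(neighbors, key=lambda j: heights[j])
        if heights[best] <= heights[i]:
            return i  # local peak: every neighbor is lower or equal
        i = best


def hill_climb_with_restarts(heights, restarts, seed=0):
    """Run hill_climb from random starting points and keep the highest peak found."""
    rng = random.Random(seed)
    peaks = [hill_climb(heights, rng.randrange(len(heights))) for _ in range(restarts)]
    return max(peaks, key=lambda i: heights[i])


# Hypothetical landscape with three peaks, at indices 1, 4, and 7.
landscape = [1, 3, 2, 5, 9, 4, 6, 7, 3]

if __name__ == "__main__":
    print(hill_climb(landscape, 0))  # stalls on the low peak at index 1
    print(hill_climb_with_restarts(landscape, 200))
```

Starting from the left edge, the search stops at a peak of height 3 and never sees the summit of height 9. The number of restarts needed grows with the size of the landscape, which is the cost an annealer aims to avoid.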

Who stands to benefit and how?

In assessing where quantum computing will have most utility, it is useful to consider four capital markets industry archetypes: sellers, buyers, matchmakers (including trading platforms and brokers), and rule setters. Generally, sellers and matchmakers invest in IT to build capacity rather than address complexity. Buyers and rule setters, on the other hand, often require more complex models. Quant-driven hedge funds, for example, aim to profit through analytical complexity. This would make them a natural constituency for ultra-powerful processing. Large banks, which take on multiple roles in financial markets, are also significant early experimenters.

Quick wins for quantum computing are most likely in areas where artificial intelligence techniques such as machine learning have already improved traditional classification and forecasting. These typically involve time series problems, which often draw on large, unstructured data sets and require live data streams rather than batch processing or one-time insight generation.

One unique characteristic of the logic gates in quantum computers is that they are reversible, which means that, unlike classical logic gates, they come with an undo button. In practical terms, this means they never lose information up to the point of measurement, when qubits revert to behaving like classical bits. This benefit can be useful in the area of explainability. An algorithm used to predict a loan default may determine whether individuals are granted mortgages and on what terms. However, if a customer’s loan application is rejected, they may reasonably wish to understand why. In addition, the law in some cases requires a degree of explainability. In the United States, credit scores and credit actions are subject to the Equal Credit Opportunity Act, which compels lenders to provide specific reasons to borrowers for negative decisions.
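The difference between a reversible and an irreversible gate can be shown on ordinary bits. The sketch below is a simplification that looks only at the inputs 0 and 1, not at superpositions, and the function names are hypothetical. It checks that applying CNOT twice restores the inputs, whereas a classical AND gate maps several inputs to the same output.

```python
from itertools import product


def cnot(control: int, target: int):
    """Reversible gate: flip target only when control is 1; both bits are kept."""
    return control, target ^ control


def classical_and(a: int, b: int) -> int:
    """Irreversible gate: two input bits are reduced to a single output bit."""
    return a & b


# Applying CNOT twice returns every input pair to where it started.
cnot_is_reversible = all(
    cnot(*cnot(c, t)) == (c, t) for c, t in product((0, 1), repeat=2)
)

# Group the AND inputs by output to see which inputs become indistinguishable.
and_preimages = {}
for a, b in product((0, 1), repeat=2):
    and_preimages.setdefault(classical_and(a, b), []).append((a, b))

if __name__ == "__main__":
    print(cnot_is_reversible)
    print(and_preimages)
```

Three different input pairs produce an AND output of 0, so the inputs cannot be recovered from the result. With CNOT, each output corresponds to exactly one input.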

In terms of the current state of the art, we see four key drivers of demand for quantum computing:

Scarcity of computational resources

Companies relying on computationally heavy models (e.g., hedge fund WorldQuant, which has more than 65 million machine learning models) employ a Darwinian system to allocate virtual computing capacity; if model X performs better than model Y, then model X gets more resources and model Y gets fewer. The cost of classical processing power, which rises exponentially with model complexity, is a bottleneck in this business model. That bottleneck could be eased by the exponential speed-up that qubits can deliver over classical bits for suitable problems.

High-dimensional optimization problems

Banks and asset managers optimize portfolios based on computationally intense models that process large sets of variables. Quantum computing could allow faster and more accurate decision-making, for example in determining an optimal investment portfolio mix.
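A toy version of the portfolio problem shows where the computational load comes from. This is a minimal classical sketch under stated assumptions: each asset is either included or excluded, the score is expected return minus a risk penalty, and the returns, covariances, and risk-aversion value are made up for illustration. The function names are hypothetical.

```python
from itertools import product


def portfolio_score(selection, returns, covariance, risk_aversion):
    """Expected return of the selected assets minus a penalty for their joint risk."""
    n = len(selection)
    expected = sum(x * r for x, r in zip(selection, returns))
    risk = sum(
        selection[i] * covariance[i][j] * selection[j]
        for i in range(n)
        for j in range(n)
    )
    return expected - risk_aversion * risk


def best_portfolio(returns, covariance, risk_aversion=1.0):
    """Brute force: score every include/exclude combination and keep the best."""
    candidates = product((0, 1), repeat=len(returns))
    return max(
        candidates,
        key=lambda s: portfolio_score(s, returns, covariance, risk_aversion),
    )


# Hypothetical three-asset example.
returns = [0.10, 0.08, 0.02]
covariance = [
    [0.04, 0.005, 0.0],
    [0.005, 0.01, 0.0],
    [0.0, 0.0, 0.09],
]

if __name__ == "__main__":
    print(best_portfolio(returns, covariance))
    print(2 ** len(returns), "combinations checked")
```

With three assets there are only 8 combinations to check, but the count doubles with every asset added. Exhaustive search is therefore impractical for realistic portfolios, which is the kind of problem quantum approaches are hoped to address.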

Combinatorial optimization problems

Combinatorial optimization seeks to improve an algorithm by using mathematical methods either to reduce the number of possible solutions or to make the search faster. This can be useful in areas such as algorithmic trading, for example helping players select the highest-bandwidth path across a network.

Limitations in cryptography

Current cryptographic protocols rely on the fact that conventional computers cannot efficiently factor large numbers into their underlying prime factors. This would not be the case for quantum computers. Using a sequence of steps known as Shor’s algorithm, they could at some stage deliver an exponential increase in the speed of prime factorization and thus be able to recover the prime factors used in the encryption. On the other hand, quantum encryption would be sufficiently robust to prevent intrusion by even the most powerful classical or quantum computers.
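The link between factoring and Shor’s algorithm can be sketched classically. Shor’s algorithm reduces factoring to finding the period of modular exponentiation. The sketch below performs that period search by brute force, which is the slow step a quantum computer would accelerate, and then applies the standard classical post-processing. The function names are hypothetical, and the numbers are far too small to be relevant to real encryption.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1. This search is the step Shor's algorithm speeds up.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a: int, n: int):
    """Try to split n into two factors using the order of a modulo n."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd period: this choice of a does not help
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root: this choice of a does not help
    p = gcd(half - 1, n)
    return p, n // p


if __name__ == "__main__":
    print(find_order(7, 15))         # 4
    print(factor_from_order(7, 15))  # (3, 5)
```

For a number with hundreds of digits, the loop in `find_order` would run for an impractically long time on a classical machine, and that difficulty is what current protocols depend on.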

December 18, 2020, Published on McKinsey and Company